Gnucomo - Computer Monitoring

Design description

Arjen Baart

<arjen@andromeda.nl>

Brenno de Winter

<brenno@dewinter.com>

Peter Roozemaal

<mathfox@xs4all.nl>

November 26, 2003

| Document Information | |
|---|---|
| Version | 0.6 |
| Organization | Andromeda Technology & Automation |
| Organization | De Winter Information Solutions |


1 Introduction

Gnucomo is a system to monitor computer systems.

This document describes the design of the Gnucomo applications

and is based upon the development manifest.

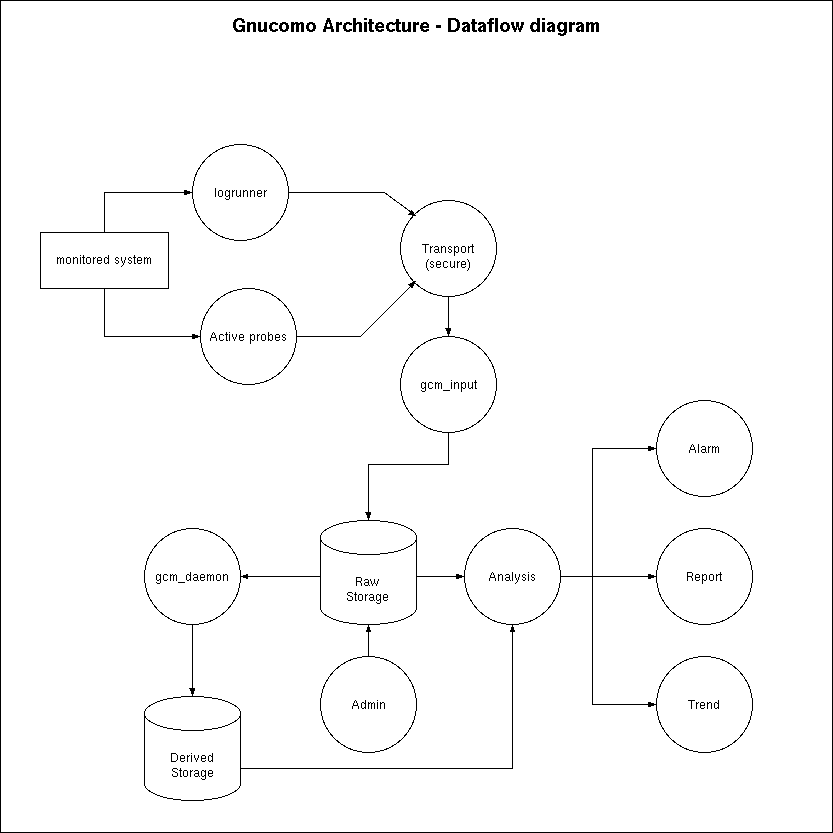

2 Architecture

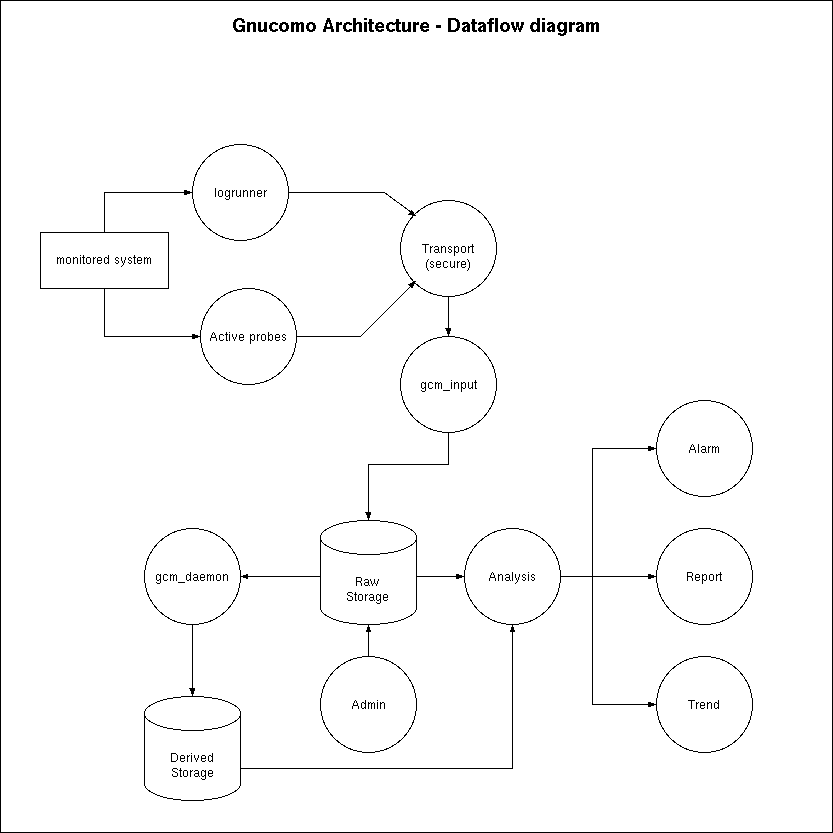

The architecture of gnucomo is shown in the

data flow diagram below:

At the left of the diagram, information is acquired from the monitored system.

Several agents can be used to obtain information from this system, in

active or passive ways.

A passive agent uses information which is available on the system anyway,

such as log files or other lists.

An active agent requests explicit data from the monitored system.

One example of a passive agent is logrunner, a program which

monitors system log files and sends regular updates to the gnucomo

server.

The agents on the monitored system send the data to some kind of transportation channel.

This can be any form of transport, such as Email, SOAP, plain file copying or

some special network connection.

If desired, the transportation may provide security.

Once arrived at the server, the information from monitored systems is captured

by the gcm_input process.

This process can obtain the data through many forms of transport and from

a number of input formats.

Gcm_input will try to recognize as much as possible from an

input message and store the obtained information into the Raw Storage

database.

The Raw Storage data is processed further and analyzed by

the gcm_daemon, which scans the data, gathers statistics and

stores its results into the Derived Storage database where

it is available for human review and further analysis.

Architectural items to consider:

- Active and passive data acquisition

- Monitoring static and dynamic system parameters

- Upper and lower limits for system parameters

Existing log analysis tools: logwatch, analog.

3 System design

Gnucomo is a collection of different application programs,

rather than a single application.

All these application programs revolve around the Gnucomo

database as described in the manifest.

3.1 Database design

The design of the database is described extensively in

the Manifest.

Assuming development is done on the same system on which the real (production)

gnucomo database is maintained, there is a need for a separate database

on which to perform development and integration tests.

Quite often, the test database will need to be destroyed and recreated.

To enable testing of gnucomo applications, all programs

need to access either the test database or the production database.

To accommodate this, each application needs an option to override the

default name of the configuration file (gnucomo.conf).

To create a convenient programming interface for object oriented languages,

a class gnucomo_database provides an abstract layer which

hides the details of the database implementation.

An object of this class maintains the connection to the database server

and provides convenience functions for accessing information in the

database.

A constructor of the gnucomo_database is passed a reference to

the gnucomo_configuration object in order to access the database.

This accommodates both production and test databases.

The constructor will immediately try to connect to the database and check its

validity.

The destructor will of course close the database connection.

Other methods provide access to the database in a low-level manner.

There will be lots more in the future, but here are a few to begin with:

- Send a SQL query to the database.

- Read a tuple from a result set.

- Obtain the userid for the current database session.

The information stored in the database as tuples is represented by classes in

other programming languages such as C++ or PHP.

Each class models a particular type of tuple (an entity)

in the database.

Such classes maintain the relation with the database on one end,

while providing methods that are specific to the entity on the other end.

All database communication and SQL queries are hidden inside the

entity's class.

This includes, for example, handling database result sets and access control.

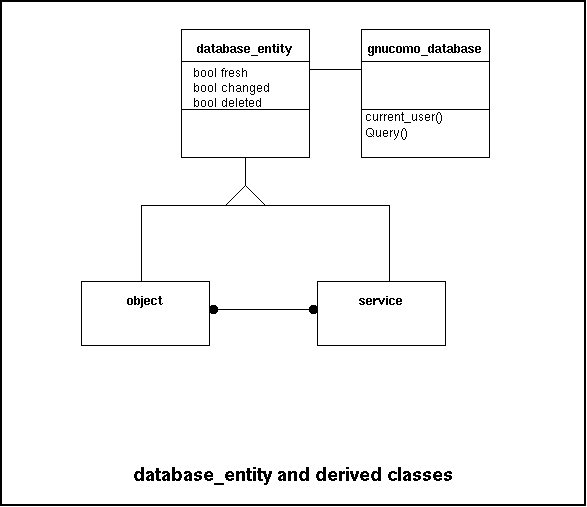

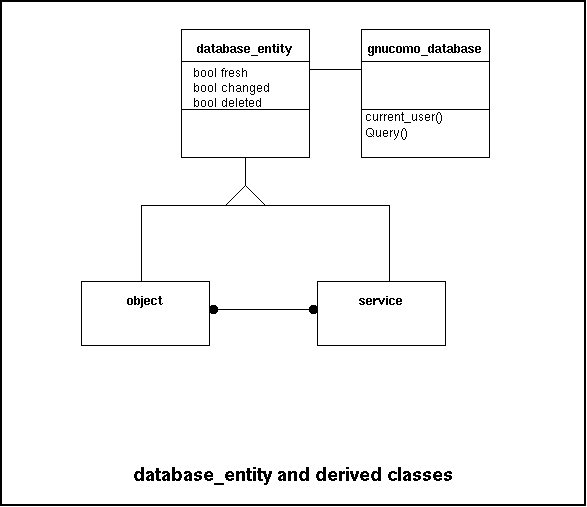

Properties and operations that are common to all classes that represent

entities in the database are captured in a common base class.

The base class, named database_entity, provides default

implementations for loading and storing tuples, construction and destruction

and iteration.

Most derived classes will override these functions.

Two examples of classes that represent entities in the database

are object and service.

Both are derived from database_entity, as shown below:

Constructors of classes derived from database_entity come in

two varieties: with or without database interaction.

Constructors that do not interact with the database have only one argument:

a reference to the gnucomo_database object which handles

the low-level interaction with the database server.

The example below shows a few of these constructors:

    database_entity::database_entity(gnucomo_database &gdb)
    object::object(gnucomo_database &gdb)
    service::service(gnucomo_database &gdb)

The objective of this type of constructor is to create a fresh tuple and

store it in the database later on.

All these constructors do is establish the connection to the database

server and fill in the defaults for the fields in the tuple.

A destructor will put the actual tuple into the database if any

information in the object has changed.

This may be by sending an INSERT if the object is completely fresh

or an UPDATE if an already existing tuple was changed.

The state information about the freshness of an object is a property

common to all database entities and is therefore maintained in

the database_entity class.

Constructors that do interact with the database accept additional

arguments after the initial gnucomo_database reference.

These extra arguments are used to retrieve a tuple from the database.

Examples of such constructors are:

    object::object(gnucomo_database &gdb, String hostname)
    object::object(gnucomo_database &gdb, long long oid)
    service::service(gnucomo_database &gdb, String name)

The set of arguments must of course correspond to a set of fields that

uniquely identify the tuple.

The primary key of the database table would be ideally suitable.

If the tuple is not found in the database, data members of the object

are set to default values and the object is marked as being fresh and

not changed.

Methods with the same name as a field in a tuple read or change the

value of that field.

Without an argument, such a method returns the current value of the field.

With a single argument, the field is set to the new value passed in the

argument and the method returns the original value.

Whenever a field is set to a new value, the object is marked as being

'changed'.

A destructor will then save the tuple to the database.
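The accessor and destructor behaviour described above can be sketched in C++11 as follows. This is only an illustration under stated assumptions: the gnucomo_database class here is a stand-in that merely records the statements it receives, std::string replaces the String class, the SQL text is schematic, and saving is done in the derived destructor, which is a choice made for this sketch rather than something the design prescribes.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in for gnucomo_database (assumption): it only records the
// statements it is asked to send, so no database server is needed.
class gnucomo_database
{
public:
   void query(const std::string &sql)
   {
      assert(!sql.empty());   // an empty statement is a programming error
      sent.push_back(sql);
   }
   std::vector<std::string> sent;
};

// State common to all entities: the database handle and the
// 'fresh' and 'changed' flags.
class database_entity
{
public:
   explicit database_entity(gnucomo_database &gdb)
      : db(gdb), fresh(true), changed(false) {}
   virtual ~database_entity() {}

protected:
   gnucomo_database &db;
   bool fresh;     // true: no tuple exists in the database yet
   bool changed;   // true: a field was modified since construction
};

class service : public database_entity
{
public:
   explicit service(gnucomo_database &gdb) : database_entity(gdb) {}

   // Save the tuple only if something changed. The SQL is schematic;
   // the real column list follows from the manifest.
   ~service()
   {
      if (changed)
         db.query(fresh ? "INSERT INTO service ..." : "UPDATE service ...");
   }

   // Without an argument: return the current value of the field.
   std::string name() const { return nm; }

   // With an argument: set the field, mark the object as changed
   // and return the original value.
   std::string name(const std::string &n)
   {
      std::string old = nm;
      nm = n;
      changed = true;
      return old;
   }

private:
   std::string nm;
};
```

A service object that goes out of scope after name("httpd") was called sends one INSERT; an object that was never modified sends nothing.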

3.2 Configuration

Configuration parameters are stored in an XML formatted configuration file.

The config file contains a two-level hierarchy.

The first level denotes the section for which the parameter is used

and the second level is the parameter itself.

Both sections and parameters are elements in XML terminology.

The top-level (root) element is the configuration tree itself.

The root element must have the same name as the application's name

for which the configuration is intended.

The level-1 elements are sections of the configuration tree.

Within these section elements, the configuration has several parameter elements.

Each parameter is an element by itself.

The element's name is the name of the parameter, just as a section

element's name is the name of the section.

The content of the parameter element is the value of the parameter.

Attributes in either section elements or parameter elements are not used.

The configuration file is located in two places.

There is a system-wide configuration in

/usr/local/etc/gnucomo.conf and each user may

have his or her own configuration in ~/.gnucomo.conf.

The value of a user-specific configuration parameter overrides

the system-wide value.

The following sections and parameters are defined for the Gnucomo

configuration:

- database
  - type
  - name
  - user
  - password
  - host
  - port
- logging
- gcm_input
- gcm_daemon

The database section defines how the database is accessed.

The database/type parameter must have the content PostgreSQL.

Other database systems are not supported yet.

The database/user and database/password provide default

login information onto the database server.

Specific user names and passwords may be specified for separate applications, such

as gcm_input and gcm_daemon.

3.2.1 gnucomo_config class

Each Gnucomo application should have exactly one object of the

gnucomo_config class to obtain its configuration

parameters.

The following methods are supported in this class:

- read(String name)

  Reads the XML formatted configuration files from /usr/local/etc/<name>.conf and ~/.<name>.conf.

- String find_parameter(String section, String param)

  Return the value of the parameter param in section section.

- String Database()

  Return the database access string to be used for the PgDatabase constructor.
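A minimal C++11 sketch of the lookup and override rules is given below. Several things in it are assumptions made for illustration: the XML parsing done by read() is left out and replaced by two already parsed trees, std::string replaces String, and the access string is assumed to be a libpq-style list of keyword=value pairs.

```cpp
#include <cassert>
#include <map>
#include <string>

// In-memory form of one parsed configuration file (assumption):
// section name -> (parameter name -> value).
typedef std::map<std::string, std::string> section_map;
typedef std::map<std::string, section_map> config_tree;

class gnucomo_config
{
public:
   // Stand-in for read(): the two parsed trees are passed in directly.
   gnucomo_config(const config_tree &system_wide, const config_tree &per_user)
      : system_conf(system_wide), user_conf(per_user) {}

   // Return the value of param in section. The user-specific value
   // overrides the system-wide one; an empty string means 'not defined'.
   std::string find_parameter(const std::string &section,
                              const std::string &param) const
   {
      assert(!section.empty() && !param.empty());
      std::string value;
      if (!lookup(user_conf, section, param, value))
         lookup(system_conf, section, param, value);
      return value;
   }

   // Build a keyword=value access string from the database section.
   // Values that contain spaces would need quoting, which is omitted here.
   std::string Database() const
   {
      static const char *const keys[][2] = {
         {"name", "dbname"}, {"user", "user"}, {"password", "password"},
         {"host", "host"}, {"port", "port"}
      };
      std::string conninfo;
      for (const auto &k : keys)
      {
         const std::string value = find_parameter("database", k[0]);
         if (value.empty()) continue;
         if (!conninfo.empty()) conninfo += ' ';
         conninfo += std::string(k[1]) + "=" + value;
      }
      return conninfo;
   }

private:
   static bool lookup(const config_tree &tree, const std::string &section,
                      const std::string &param, std::string &value)
   {
      config_tree::const_iterator s = tree.find(section);
      if (s == tree.end()) return false;
      section_map::const_iterator p = s->second.find(param);
      if (p == s->second.end()) return false;
      value = p->second;
      return true;
   }

   config_tree system_conf;
   config_tree user_conf;
};
```

With a system-wide database/name and a different database/name in the user's file, find_parameter returns the user's value, which is how a developer can point an application at a test database.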

3.3 gcm_input

gcm_input is the application which captures messages from client

systems in one form or another and tries to store information from these messages

into the database.

A client message may arrive in a number of forms and through any kind of

transportation channel.

Here are a few examples:

- Obtained directly from a local client's file system.

- From the output of another process, through standard input.

- Copied remotely from a client's file system, e.g. using

ftp, rcp or scp.

This is usually handled through spooled files.

- Through an email.

- As a SOAP web service, carried through HTTP or SMTP.

- Through a TCP connection on a special socket.

On top of that, any message may be encrypted, for example with PGP or GnuPG.

In any of these situations, gcm_input should be able to extract

as much information as possible from the client's message.

In case the message is encrypted, it may not be possible to run gcm_input

in the background, since human intervention is needed to enter the secret key.

The primary function of gcm_input is to store lines from a client's

log files into the log table or scan a report from a probe and update

the parameter table.

To do this, we need certain information about the client message that is usually not

in the content of a log file.

This information includes:

- The source of the log file, most often in the form of the client's hostname.

- The time at which the log file arrived on the server.

- The service on the client which produced the log file.

Sometimes, this information is available from the message itself, as in an email header.

On other occasions, the information needs to be supplied externally,

e.g. by using command line options.

In any case, this type of 'header' information is relevant to the message

as a whole.

As a result, gcm_input can accept one and only one message at a time.

For example, it is not possible to connect the standard output of

logrunner to the standard input of gcm_input and have

a continuous stream of messages from different log sources.

Each message should be fed to gcm_input separately.

Also when logrunner uses a special socket to send logging data,

a new connection must be created for each message.

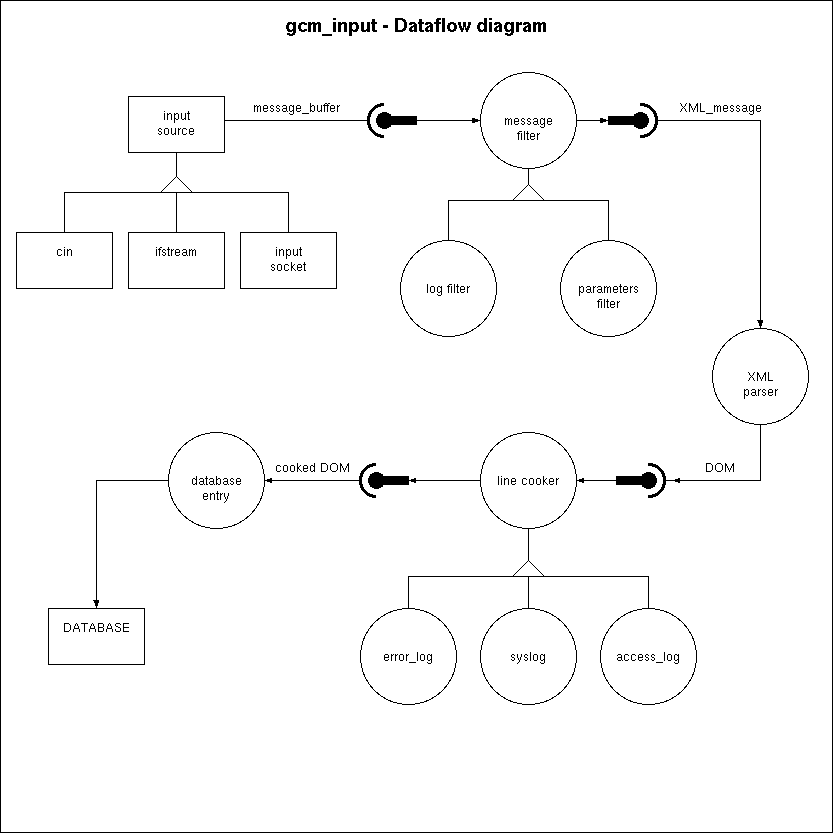

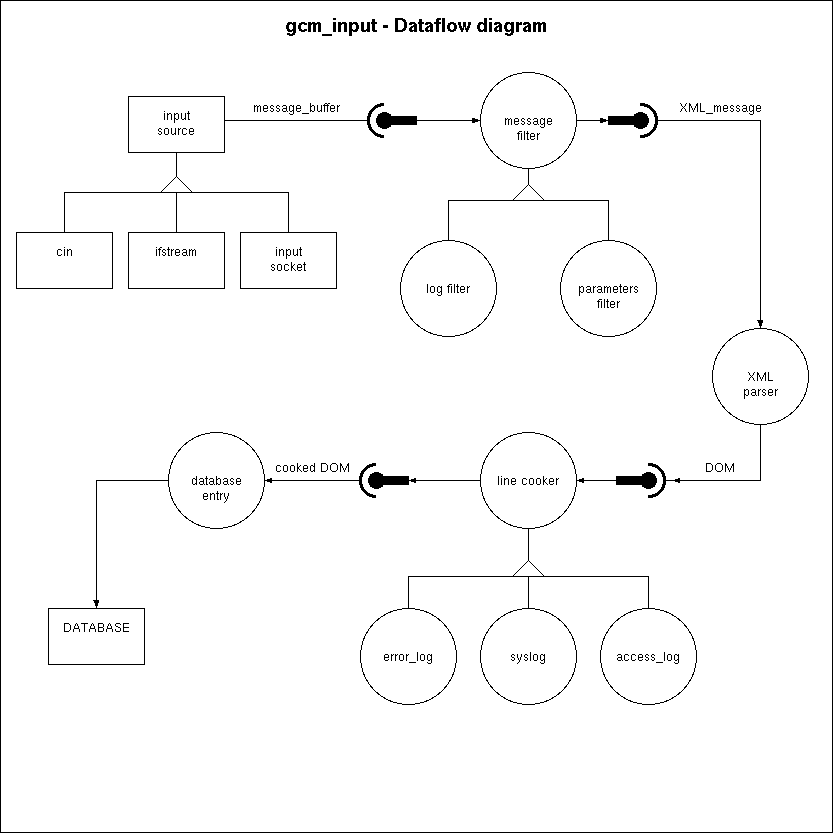

The dataflow diagram below shows how a message travels from the input source

to the database.

Internally, gcm_input handles XML input

and each input item must have its data fields split into appropriate XML elements.

When data is offered in some other form, this data must be filtered

and transformed into XML before gcm_input can handle it.

Two levels of transformation are possible.

At the highest level, the whole message is transformed into an XML

document with a <message> root element and the

appropriate <header> and <data>

elements, all of which are put in the proper namespace.

At the lowest level, each line of the message's data can be transformed

into a <cooked> <log> element.

Two classes of replaceable filter objects take care of these transformations.

Depending on the content of the message and/or command line options to

gcm_input, an appropriate filter object is inserted into

the data stream.

The message_filter transforms

the raw input data into an XML document.

The XML document is processed by the XML parser and stored into the database

or saved into a spool area for later processing.

The latter happens, for example, when the database is unavailable.

The task of the message_filter object is to create the <header>

elements and the <data> element containing either a <log> or

a <parameters> element, along with all their child elements.

To do this, a message_filter object must work closely

together with a line_cooker object.

There are two major classes of message_filter objects:

one to create a <log> element and one to create a <parameters>

element.

Either one of these must be capable of creating a <header> element which

is filled with information from command line arguments or an email header

in the input stream.

The base message_filter is not much more than a short circuit,

which merely copies the input stream into the internal XML buffer.

This is used when the input is already in XML format.

The line_cooker

operates on a node in the DOM tree which is

supposed to be a <raw> <log> element that contains one line

from a log file.

The line_cooker transforms a raw log line into

its constituent parts that make up a <cooked> element.

Since each type of logfile uses a different layout and syntax,

different line cookers can be used, depending on the type of log.

This type is indicated by the <messagetype> element in the header

part of the message.

Clearly, the line_cooker is a polymorphic entity.

Exactly which line_cooker is used is determined through

classifying

the content of the message or the message type indicated in the header.

The line_cooker base class provides a default implementation

for most methods, while derived classes provide the actual cooking.

Output created by gcm_input for logging and debugging purposes

can be sent to one of several destinations:

- standard error.

- a log file.

- the system log.

- an email address.

The actual destination is stated in the gnucomo

configuration file. The default is stderr.

A log object filters output according to the debug level.

3.3.1 Classifying messages

Apart from determining information about the client's message, the content

of the message needs to be analyzed in order to handle it properly.

The body of the message may contain all sorts of information, such as:

- System log file

- Apache log file

- Report from a Gnucomo agent or other probe, for example "rpm -qa"

or "df -k".

- Generic XML input

- Something else...

Basically, gcm_input accepts two kinds of input: log lines

and parameter reports.

The message is analyzed to obtain information about what the message entails

and where it came from.

The message classification embodies the way in which a message must be

handled and in what way information from the message can be put into

the database.

Aspects for handling the message are for example:

- Strip lines at the beginning or end.

- Store each line separately or store the message as a whole.

- How to extract hostname, arrival time and service from the message.

- How to break up the message into individual fields for a log record.

These aspects are all handled in polymorphic message_filter

and line_cooker classes.

The result of classifying a message is the selection of the proper

objects derived from these classes from a collection of such objects.

The classify() method tries to extract that information.

Sometimes, this information cannot be determined with absolute certainty.

The certainty expresses how sure we are about the contents in the message.

Classifying a message may be performed with an algorithm as shown in

the following pseudo code:

    uncertainty = 1.0
    while uncertainty > ε AND not at end
        Scan for a marker
        if a marker matches
            uncertainty = uncertainty * P    // P < 1.0

The uncertainty is, of course, the opposite of the certainty.

It expresses how unsure we are about the content of the message, as a

number between 0.0 and 1.0.

In fact, it is the probability that the message is not what we think it is.

Initially, a message is not classified and the uncertainty is 1.0.

Some lines point toward a certain type of message but do not absolutely determine

the type of a message. Other pieces of text are typical for a certain message type.

Such pieces of text, called markers, are discovered in a message,

possibly by using regular expression matches.

Examples of markers that determine the classification of a client message

are discussed below.

To determine the message type, classify() uses the collection

of line_cooker objects and maintains

the uncertainty associated with each line_cooker object.

A line of input from the message is tested using the line_cooker::check_pattern

method for each line_cooker object.

When a marker matches, we are a bit more sure about the content of the message

and the uncertainty for that line_cooker object decreases by

multiplying the uncertainty by P, a number between 0 and 1.

This process continues line after line from the input message until the

uncertainty for one of the line_cooker objects is sufficiently low

(i.e. less than a preset threshold, ε).

At the end, the line_cooker object with the lowest uncertainty

is selected.
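The selection process described above might look like the following C++11 sketch. It is not the actual classify() method: the candidate structure, the use of std::function for the marker test and the way P and ε are passed as arguments are all assumptions made to keep the example self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// One candidate line_cooker, reduced to what classification needs
// (assumption): a name, a marker test and the running uncertainty.
struct candidate
{
   std::string name;
   std::function<bool(const std::string &)> check_pattern;
   double uncertainty;
};

// Returns the index of the candidate with the lowest uncertainty.
// P (0 < P < 1) is the factor applied on each marker match; scanning
// stops early once one uncertainty drops below epsilon.
std::size_t classify(std::vector<candidate> &cookers,
                     const std::vector<std::string> &lines,
                     double P, double epsilon)
{
   assert(!cookers.empty() && P > 0.0 && P < 1.0);

   // Initially nothing is known about the message.
   for (candidate &c : cookers) c.uncertainty = 1.0;

   bool sure = false;
   for (std::size_t l = 0; l < lines.size() && !sure; ++l)
   {
      for (candidate &c : cookers)
      {
         if (c.check_pattern(lines[l])) c.uncertainty *= P;
         if (c.uncertainty < epsilon) sure = true;
      }
   }

   // Select the candidate we are least unsure about.
   std::size_t best = 0;
   for (std::size_t i = 1; i < cookers.size(); ++i)
      if (cookers[i].uncertainty < cookers[best].uncertainty) best = i;
   return best;
}
```

Note that a candidate whose marker never matches keeps an uncertainty of 1.0, so a caller can tell "best of a bad lot" apart from a real match by inspecting the uncertainty of the selected candidate.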

From - Sat Sep 14 15:01:15 2002

This is almost certainly a UNIX style mail header.

There should be lines beginning with From: and Date:

before the first empty line is encountered.

The hostname of the client that sent the message and the time of arrival

can be determined from these email header lines.

The content of the message is still to be determined by matching

other markers.

-----BEGIN PGP MESSAGE-----

Such a line in the message certainly means that the message is PGP or GnuPG

encrypted.

Decrypting is possible only if someone or something provides a secret key.

<?xml version='1.0'?>

The XML header declares the message to be generic XML input.

The structure of the XML message that gcm_input accepts

is described in the next section.

Sep 1 04:20:00 kithira kernel: solo1: unloading

The general pattern of a system log file is an abbreviated month name, a day,

a time, a name of a host without the domain, the name of a service followed

by a colon and finally, the message of that service.

We can match this with a regular expression to see if the message holds syslog lines.

Similar matches can be used to find Apache log lines or output from the dump

backup program or anything else.
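As an illustration of such a match, the sketch below tests a line against the general syslog pattern with the C++11 std::regex library. The expression, the syslog_fields structure and the function name match_syslog are assumptions for this example; a real marker would probably have to allow for more variations.

```cpp
#include <cassert>
#include <regex>
#include <string>

// The parts of a syslog line as described above (hypothetical structure).
struct syslog_fields
{
   std::string month, day, time, host, service, message;
};

// Returns true and fills f if the line looks like a syslog line:
// month, day, time, host without domain, service, colon, message.
bool match_syslog(const std::string &line, syslog_fields &f)
{
   static const std::regex pattern(
      "^([A-Z][a-z]{2}) +([0-9]{1,2}) +([0-9]{2}:[0-9]{2}:[0-9]{2}) +"
      "([^ .]+) +([^ :]+): ?(.*)$");

   std::smatch m;
   if (!std::regex_match(line, m, pattern)) return false;

   assert(m.size() == 7);   // the whole match plus six groups
   f.month   = m[1];
   f.day     = m[2];
   f.time    = m[3];
   f.host    = m[4];
   f.service = m[5];
   f.message = m[6];
   return true;
}
```

The example line from the text is split into the host kithira, the service kernel and the message "solo1: unloading", while a PGP header line is rejected.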

3.3.2 Generic XML input

Since gcm_input can not understand every conceivable form

of input, a client can offer its input in a more generic form which reflects

the structure of the Gnucomo database.

In this case, the input is structured in an XML document that contains the input

data in a form that allows gcm_input to store the information

into the database without knowing the nature of the input.

The XML root element for gcm_input is a <message>, defined

in the namespace with namespace name http://gnucomo.org/transport/.

All other elements and attributes of the <message> must be defined

within this namespace.

Within the <message> element there is a <header>

and a <data> element.

The <data> element may contain the logdata in an externally

specified format.

The <header> element contains a number of elements (fields), some

mandatory, some optional. The text of the element contains the value of

the element.

The following elements have been defined:

- <messagetype> mandatory

  The type (format) of the logdata in the data element. The message type determines the way in which raw log elements are parsed and split up into separate fields for insertion into the database. The message types gcm_input understands are:

  - system log : The most common form of UNIX system logs. Also used in most Linux distributions.
  - IRIX system log : Variation of system log, used by SGI.
  - apache access log : Access log of the Apache http daemon, in default form.
  - apache error log : Error log of the Apache http daemon, in default form.

  There must also be a 'generic' system log in case all elements are cooked already.

- <hostname> mandatory

  The name of the system that generated the data in the data block. This can be different from the computer composing the message.

- <service> optional

  The (default) value of the service running on the host that generated the message data. Used for logfiles that do not contain the service name embedded in them.

- <time> optional

  The best approximation to the time that the data was generated. Used for (log)data that does not contain an embedded datestamp.

The following example shows an XML message for gcm_input

with a filled-in header and an empty <data> element:

    <gcmt:message xmlns:gcmt='http://gnucomo.org/transport/'>
      <gcmt:header>
        <gcmt:messagetype>apache error log</gcmt:messagetype>
        <gcmt:hostname>client.gnucomo.org</gcmt:hostname>
        <gcmt:service>httpd</gcmt:service>
        <gcmt:time>2003-04-17 14:40:46.312895+01:00</gcmt:time>
      </gcmt:header>
      <gcmt:data/>
    </gcmt:message>

The data element can hold one of two possible child

elements: <log> or <parameters>.

The <log> element may contain any number of lines from

a system's log file, each line in a separate element.

A single log line is the content of either a <raw> or

a <cooked> element.

The <raw> element contains the log line "as is" and nothing more.

This is the easiest way to provide XML data for gcm_input.

However, the log line itself must be in a form that gcm_input

can understand.

After all, gcm_input still needs to extract meaningful information

from that line, such as the timestamp and the service that created the log.

The client can also choose to provide that information separately by encapsulating

the log line in a <cooked> element.

This element may have up to four child elements, two of which are mandatory:

- <timestamp> mandatory.

  The time at which the log line was generated by the client.

- <hostname> optional.

  For logs that include a hostname in each line. This hostname is checked against the hostname in the <header> element.

- <service> optional.

  If the service that generated the log is not provided in the <header>, the service must be stated for each log line separately. Otherwise, each log line is assumed to be generated by the same service.

- <raw> mandatory.

  The content of the full log line. This has the same content as the singular <raw> element would have if the log line were not provided in a <cooked> element.

The following shows an example of the log message with two lines in the

<log> element, one raw and one cooked:

    <gcmt:data xmlns:gcmt='http://gnucomo.org/transport/'>
      <gcmt:log>
        <gcmt:raw>
          Apr 13 04:31:03 schiza kernel: attempt to access beyond end of device
        </gcmt:raw>
        <gcmt:cooked>
          <gcmt:timestamp>2003-04-13 04:31:03+02:00</gcmt:timestamp>
          <gcmt:hostname>schiza</gcmt:hostname>
          <gcmt:service>kernel</gcmt:service>
          <gcmt:raw>
            Apr 13 04:31:03 schiza kernel: 03:05: rw=0, want=1061109568, limit=2522173
          </gcmt:raw>
        </gcmt:cooked>
      </gcmt:log>
    </gcmt:data>

The <parameters> element contains a list of parameters

of the same class. The class is provided as an attribute in the

<parameters> open tag.

There is a <parameter> element for each parameter in the list.

The child elements of a <parameter> are one optional

<description> element and zero or more <property>

elements.

The names of a parameter and a property are provided by the mandatory name

attributes in the respective elements.

The following example shows a possible parameter report from a "df -k":

    <gcmt:data xmlns:gcmt='http://gnucomo.org/transport/'>
      <gcmt:parameters gcmt:class='filesystem'>
        <gcmt:parameter gcmt:name='root'>
          <gcmt:description>Root filesystem</gcmt:description>
          <gcmt:property gcmt:name='size'>303344</gcmt:property>
          <gcmt:property gcmt:name='used'>104051</gcmt:property>
          <gcmt:property gcmt:name='available'>183632</gcmt:property>
          <gcmt:property gcmt:name='device'>/dev/hda1</gcmt:property>
          <gcmt:property gcmt:name='mountpoint'>/</gcmt:property>
        </gcmt:parameter>
        <gcmt:parameter gcmt:name='usr'>
          <gcmt:description>Usr filesystem</gcmt:description>
          <gcmt:property gcmt:name='size'>5044188</gcmt:property>
          <gcmt:property gcmt:name='used'>3073716</gcmt:property>
          <gcmt:property gcmt:name='available'>1714236</gcmt:property>
          <gcmt:property gcmt:name='device'>/dev/hdd2</gcmt:property>
          <gcmt:property gcmt:name='mountpoint'>/usr</gcmt:property>
        </gcmt:parameter>
      </gcmt:parameters>
    </gcmt:data>

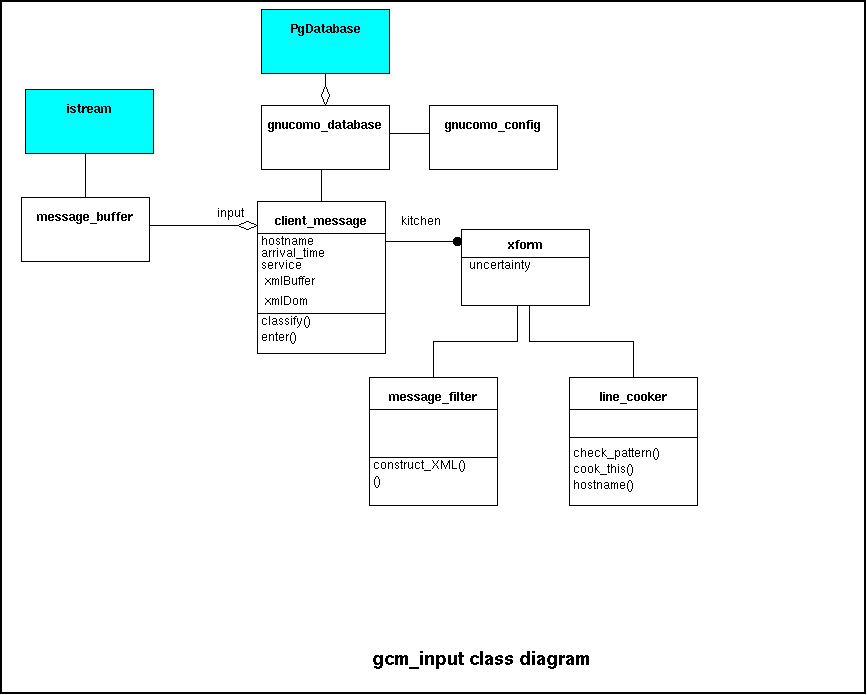

3.3.3 Gcm_input classes

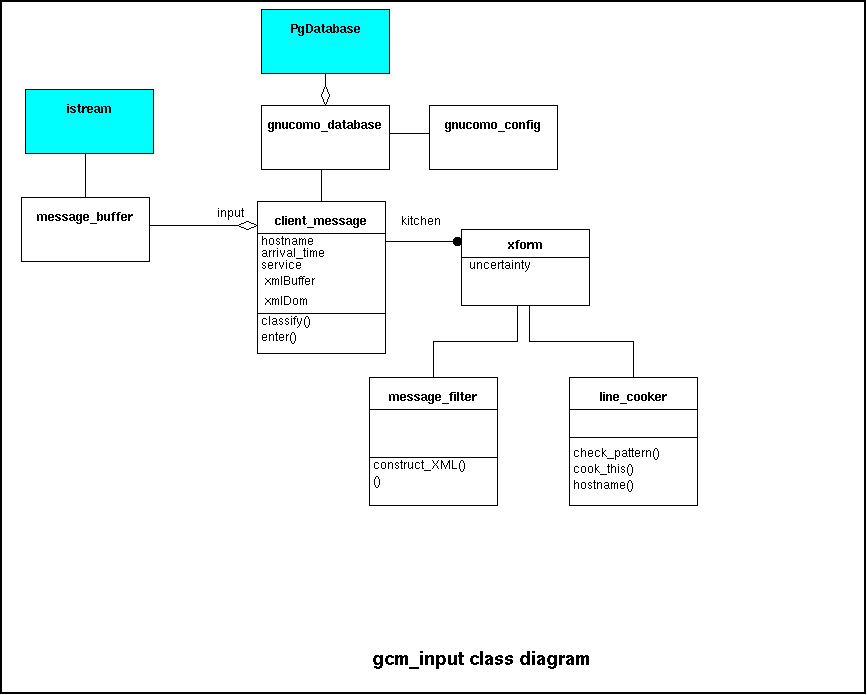

The figure below shows the class diagram that is used for gcm_input:

The heart of the application is a client_message object.

This object reads the message through the

message_buffer from some

input stream (file, string, stdin or socket), classifies the message and

enters information from the message into the database.

The client_message object holds a collection of message_filter

and associated line_cooker objects.

The association is maintained in a xform object.

Note that several line_cooker objects can be associated with a single message_filter object.

For example, a system log or a web server log are processed in a similar

manner, i.e. each line is transformed into a <log> element.

The patterns of the individual lines, however, are entirely different.

During the classification of the input data, one combination of a

message_filter and line_cooker is selected.

The classification process works by calculating the uncertainty with which

a line_cooker matches with the input data.

The one with the least uncertainty is selected.

3.3.3.1 client_message

The client_message has a relationship with a gnucomo_database object which

is an abstraction of the tables in the database.

These are the methods for the client_message class:

- client_message::client_message(istream *in, gnucomo_database *db)

  Constructor.

- void add_cooker(line_cooker *lc, message_filter *mf)

  Add another line_cooker object with the associated message_filter object to the collection. This initializes the uncertainty with which the line_cooker is selected to 1.0.

- double client_message::classify(String host, date arrival_d, hour arrival_t, String serv)

  Try to classify the message and return the certainty with which the class of the message could be determined. If the hostname, arrival time and service cannot be extracted from the message, use the arguments as defaults. This will examine the first few lines of the input data to select one of the message_filter objects, with its associated line_cooker object, from the collection built with the add_cooker method.

- int enter()

  Insert the message contents into the gnucomo database. Returns the number of records inserted.

The input data from the message_buffer

is first transformed into an XML document (a strstream object)

by invoking the message_filter and line_cooker objects.

The XML document in the internal buffer is then parsed into an

XML DOM tree, using the Gnome XML parser.

The XML document may also be validated against an XML Schema definition.

After extracting and checking the <header> elements,

the data nodes are extracted and inserted into the database,

possibly using a line_cooker object to cook raw

log elements.

If an error occurs in some stage of this process, the XML document

is dumped in a spool area for later processing.

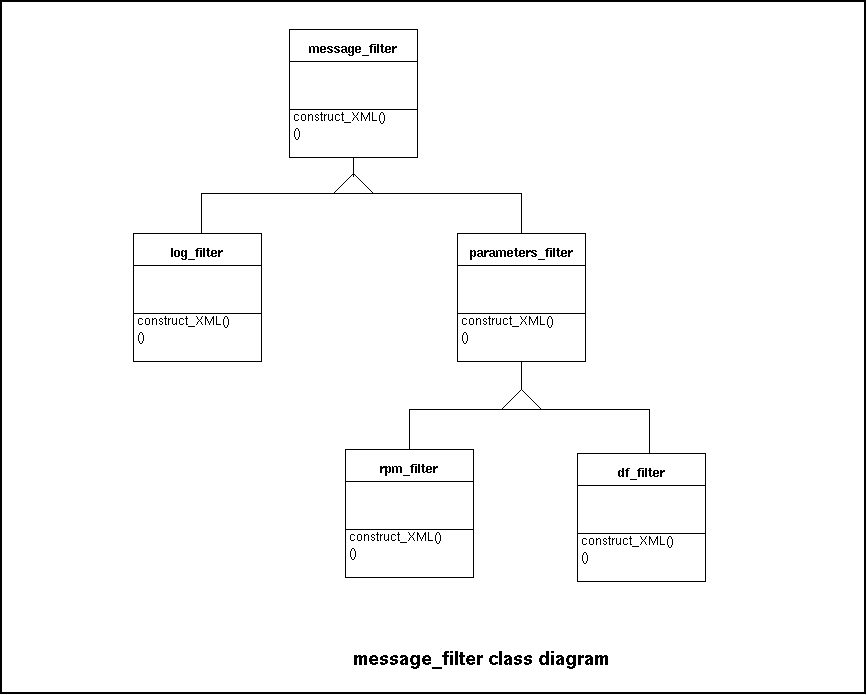

3.3.3.2 message_filter

A message_filter transforms the raw input data into an

XML document suitable for further parsing and storage into the database.

The base class, message_filter does nothing but copy the

input stream into an internal XML buffer.

An object of this class is used when the input is already in XML format.

Classes derived from message_filter read the input line by line,

possibly extracting information from an email header if available.

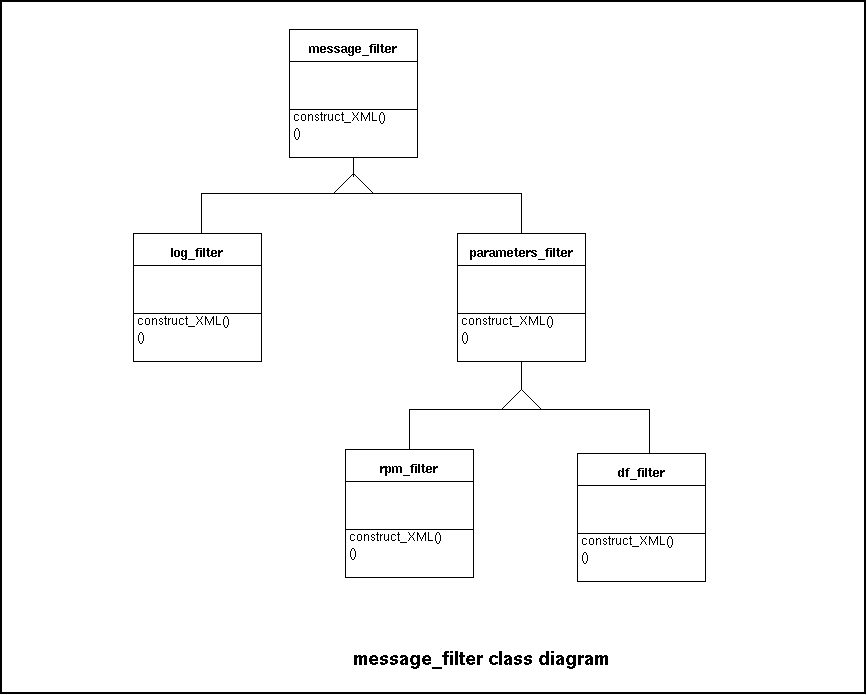

Classes derived from message_filter transform various kinds

of input into an XML document. The following diagram shows some examples:

The two classes derived directly from message_filter reflect

that gcm_input can handle two kinds of input: The

log_filter for log files

and parameters_filter for parameter reports.

Each of these types of input is transformed into an entirely different XML document and is stored quite differently in the database.

Classes that are derived further down the hierarchy will handle more specific

forms of input.

3.3.3.3 line_cooker

To turn a raw line from a log file into separate parts that can be stored

in the database, i.e. parse the line, the client_message

object uses a line_cooker object.

This is a polymorphic object, so each type of log can have its own parser,

while the client_message object uses a common interface

for each one.

For each message, one specific line_cooker object is

selected as determined by the message type.

E.g., the derived class syslog_cooker is used for system logs.

When the client_message object encounters a raw

log element, it takes the following steps to turn this into a

cooked log element:

- Remove the TEXT node of the "raw" element and save its content.
- Change the name of the element into "cooked".
- Check if the content matches the syntax of the type of log we're processing at the moment. This depends on the message type and is therefore a task for the line_cooker object.
- Have the line_cooker parse the content and extract the timestamp and optionally the hostname and service.
- Insert new child elements into the cooked element.

After that, the cooked element is ready for further processing

and possibly storing into the database.

The line_cooker base class holds three protected members

that must be filled with information by the derived classes:

- UTC ts : the timestamp.
- String hn : the hostname.
- String srv : the service.

Corresponding base class methods (timestamp,

hostname and service) will do

nothing more than return these values.

It is up to the derived class's cook_this method

to properly initialize these members.

The methods for the line_cooker class are:

-

bool line_cooker::check_pattern(String logline)

Tries to match the logline against that patterns

that describe the message syntax.

In the line_cooker base class, this is a pure virtual function.

Returns true if the log line adheres to the message-specific syntax.

-

bool line_cooker::cook_this(String logline, UTC arrival)

Extracts information from the logline.

The arrival can be used to make corrections to incomplete

time stamps in the log file.

The implementation in a derived class must properly initialize the protected

members described above.

In the line_cooker base class, this is a pure virtual function.

Returns false if the pattern does not match.

-

String line_cooker::message_type()

Return the message type for which this line cooker is intended.

-

UTC line_cooker::timestamp()

Returns the timestamp that was previously extracted from the log line

or a 'null' timestamp if that information could not be extracted.

-

String line_cooker::hostname()

Returns the hostname that was previously extracted from the log line,

or an empty string if that information could not be extracted.

-

String line_cooker::service()

Returns the service that was previously extracted from the log line,

or an empty string if that information could not be extracted.
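
The interface described above can be sketched as follows. This is a minimal sketch under several assumptions, not the actual Gnucomo source: std::string and std::time_t stand in for the String and UTC classes, a zero time_t plays the role of the 'null' timestamp, the message type is passed to a protected constructor, and the derived class epoch_cooker with its log format ("<seconds or -> <host> <service>: <text>") is purely hypothetical.

```cpp
#include <cassert>
#include <ctime>
#include <regex>
#include <string>

// Stand-ins for the String and UTC classes named in the design.
using String = std::string;
using UTC    = std::time_t;   // assumption: 0 plays the role of the 'null' timestamp

class line_cooker
{
public:
    virtual ~line_cooker() = default;

    // Pure virtual in the base class, as described in the text.
    virtual bool check_pattern(String logline) = 0;
    virtual bool cook_this(String logline, UTC arrival) = 0;

    // The accessors do nothing more than return the stored values.
    String message_type() { return mtype; }
    UTC    timestamp()    { return ts; }
    String hostname()     { return hn; }
    String service()      { return srv; }

protected:
    // Assumption: the design does not say how the message type is stored.
    explicit line_cooker(String type) : mtype(type) {}

    UTC    ts = 0;   // the timestamp
    String hn;       // the hostname
    String srv;      // the service

private:
    String mtype;
};

// Hypothetical cooker for a made-up format:
//   "<seconds since epoch or -> <host> <service>: <text>"
class epoch_cooker : public line_cooker
{
public:
    epoch_cooker() : line_cooker("epoch log") {}

    bool check_pattern(String logline) override
    {
        return std::regex_match(logline, pattern());
    }

    bool cook_this(String logline, UTC arrival) override
    {
        std::smatch m;
        if (!std::regex_match(logline, m, pattern()))
            return false;                    // the pattern does not match
        // A missing timestamp ("-") is replaced by the arrival time.
        ts  = (m[1] == "-") ? arrival : static_cast<UTC>(std::stoll(m[1].str()));
        hn  = m[2].str();
        srv = m[3].str();
        return true;
    }

private:
    static const std::regex &pattern()
    {
        static const std::regex re(R"((\d{1,10}|-) (\S+) (\S+): .*)");
        return re;
    }
};
```

Substituting the arrival time for a missing timestamp is the simplest possible correction; a real cooker for syslog lines would more likely use the arrival time only to fill in the missing year.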

3.3.3.4 message_buffer

Some kind of input buffering is needed when a client message is being processed.

The contents of the message are not entirely clear until a few lines are analyzed,

and these lines probably need to be read again.

When the message is stored in a file, this is no problem; a simple lseek allows us

to read any line over and over again.

However, when the message comes from an input stream, like a TCP socket or just

plain old stdin, this is a different matter.

Lines of the message that have already been read will be lost forever, unless they are

stored somewhere.

To store an input stream temporarily, there are two options:

- In an internal memory buffer.

- In an external (temporary) file.

The message_buffer class takes care of the input buffering, thus

hiding these implementation details.

On the outside, a message_buffer can be read line by line until the

end of the input is reached.

Lines of input can be read again by backing up to the beginning of the message

by using the rewind() method or by backing up one line

with the -- operator.

The message_buffer object maintains a pointer to the next

available line.

The ++ operator, being the opposite of the --

operator, skips one line.

The >> operator reads data from the message

into the second (String) operand, just like the >>

operator for an istream.

There is a small difference, though.

The >> operator for a message_buffer

returns a boolean value which states if there actually was input available.

This value will usually turn to false at the end of file.

A second difference is the fact that input data can only be read into

String objects a line at a time.

There are no functions for reading integer or floating point numbers.

The >> operator reads the next line either from

an internal buffer or from the external input stream if the internal

buffer is exhausted.

Lines read from the input stream are cached in the internal buffer,

so they are available for reading another time, e.g. after

rewinding to the beginning of the message.

Methods for the message_buffer class:

- message_buffer::message_buffer(istream *in)

Constructor.

- bool operator >>(message_buffer &, String &)

- message_buffer::rewind()

- message_buffer::operator --

- message_buffer::operator ++
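
The behaviour described above can be sketched with an in-memory cache. This is a minimal sketch, not the actual implementation: it uses std::string in place of String, chooses the internal memory buffer option (a std::vector of lines) rather than a temporary file, and the private helper fetch() is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

class message_buffer
{
public:
    explicit message_buffer(std::istream *in) : input(in) {}

    // Back up to the beginning of the message.
    void rewind() { next = 0; }

    // Back up one line, if there is one to back up to.
    message_buffer &operator--()
    {
        if (next > 0)
            --next;
        return *this;
    }

    // Skip one line.
    message_buffer &operator++()
    {
        std::string skipped;
        fetch(skipped);
        return *this;
    }

    friend bool operator>>(message_buffer &buf, std::string &line);

private:
    // Hypothetical helper: read the next line from the cache, or from the
    // input stream when the cache is exhausted. Lines read from the stream
    // are cached so they can be read again later.
    bool fetch(std::string &line)
    {
        if (next < cache.size()) {
            line = cache[next++];
            return true;
        }
        std::string fresh;
        if (input && std::getline(*input, fresh)) {
            cache.push_back(fresh);
            ++next;
            line = fresh;
            return true;
        }
        return false;   // no more input available
    }

    std::istream            *input;
    std::vector<std::string> cache;      // lines read so far
    std::size_t              next = 0;   // index of the next available line
};

// Returns true if a line was actually available.
bool operator>>(message_buffer &buf, std::string &line)
{
    return buf.fetch(line);
}
```

A typical use would be to wrap a stream such as std::cin or a std::istringstream, read a few lines to determine the message type, call rewind() and then read the whole message again from the start.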

3.3.4 Command arguments

Gcm_input understands the following command line arguments:

- -c <name> : Configuration name

- -d <date> : Arrival time of the message

- -h <hostname> : FQDN of the client

- -s <service> : service that created the log

- -v : verbose output. Print lots of debug information

- -V : Print version and exit
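
As an illustration, the options listed above could be parsed with the POSIX getopt function. This is only a sketch: how gcm_input really parses its arguments is not described here, and the structure gcm_input_options and the function parse_options are hypothetical names. The date is kept as a plain string because its format is not specified in this section.

```cpp
#include <cassert>
#include <string>
#include <unistd.h>   // POSIX getopt, optarg, optind

// Hypothetical container for the command line settings.
struct gcm_input_options
{
    std::string config_name;    // -c <name>
    std::string arrival;        // -d <date>, left unparsed in this sketch
    std::string hostname;       // -h <hostname>
    std::string service;        // -s <service>
    bool verbose      = false;  // -v
    bool show_version = false;  // -V
};

// Returns false if an unknown option or a missing argument is found.
bool parse_options(int argc, char *const argv[], gcm_input_options &opts)
{
    optind = 1;   // start scanning at the first argument
    int c;
    while ((c = getopt(argc, argv, "c:d:h:s:vV")) != -1) {
        switch (c) {
        case 'c': opts.config_name = optarg;  break;
        case 'd': opts.arrival = optarg;      break;
        case 'h': opts.hostname = optarg;     break;
        case 's': opts.service = optarg;      break;
        case 'v': opts.verbose = true;        break;
        case 'V': opts.show_version = true;   break;
        default:  return false;
        }
    }
    return true;
}
```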

3.4 gcm_daemon

Gcm_daemon is the application that processes data that has just

arrived in the database.

It handles the log-information delivered by gcm_input

in the log table of the database.

With this data, further storage and classification can be done.

Whereas gcm_input is a highly versatile application that is

started and stopped all the time, the daemon is continuously available, monitoring

the entire system. Basically, the daemon monitors everything that happens

in the database, executes continuous checks and processes all the data.

The two applications (gcm_input and gcm_daemon) together are the core of the central system.

The application has the following tasks:

- Processing data into other tables so that easy detection can take place

- Raising notifications based on the available input

- Maintaining the status of notifications and changing priority when needed

- Periodically performing checks for alerts that are communicated through the notification-table

- Performing updates on the database when a new version of the software is loaded

3.4.1 Performing checks

One of the most difficult tasks for the daemon is performing the automatic checks.

Every check is different and will be made up of several parameters that have to test negative.

That makes it hard to define this in software.

Another downside is that some work may be very redundant.

For that reason a more generic control structure is needed based on the technologies used.

The logical choice is then to focus on the capabilities in the database and perform

the job by executing queries.

Since the system is about detecting problems and issues we build the detection in

such a way that queries on the database result in 'suspicious' logrecords.

So-called 'innocent' records can be ignored. So if a query gives a result, a

problem is present; if there is no result, there isn't a problem.

When we look for common ground in the process of identifying problems,

it can be said that all results are based on the log-table

(as stated in the manifest, the log-table is the one and only table where input

arrives and is stored for later use).

Furthermore there are two ways of determining if a problem is present:

-

A single log-record or a group of log-records is within or outside the boundaries set.

If it is outside the boundaries the logrecord(s) is/are a potential problem.

If more than one boundary is set, all of them need to be applied.

Results can be derived from fixed data.

-

A set of records outlines a trend that over time may turn out

to be a problem. These types of values are not fixed and directly legible,

but are more or less derived data. That data is input for some checks (previous bullet).

In both cases a set of queries can be run.

If more than one query is to be executed, the later queries can be executed on

only the results of the earlier ones. For that reason intermediate results have to be stored in a

temporary table for later reuse.

Saving a session in combination with the log-records found is sufficient.

This is also true since logrecords are the basis of all derived presences in

the numerous log_adv_xxx-tables and always have a reference to the log-table.

Building the checks will thus be nothing more than combining queries

and adding a classification to the results of that query.
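
The idea of a check as a chain of queries with a classification can be sketched as follows. This is an in-memory illustration only: the real gcm_daemon would run queries against the database and keep the intermediate results in a temporary table. The names log_record, query, check and run_check, as well as the fields of log_record, are hypothetical; each "query" is modelled as a predicate on a log record.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical, simplified view of a record in the log-table.
struct log_record
{
    long        id;
    std::string service;
    std::string text;
};

// A "query" selects suspicious records; here it is just a predicate.
using query = std::function<bool(const log_record &)>;

struct check
{
    std::string        classification;  // attached to whatever the check finds
    std::vector<query> queries;         // applied in order
};

// Runs every query on the results of the previous one and returns the
// ids of the log records that survive all of them. An empty result
// means that no problem was found.
std::vector<long> run_check(const check &chk, const std::vector<log_record> &log_table)
{
    // The session plays the role of the temporary table: it only holds
    // references to records in the log-table.
    std::vector<const log_record *> session;
    for (const log_record &r : log_table)
        session.push_back(&r);

    for (const query &q : chk.queries) {
        std::vector<const log_record *> kept;
        for (const log_record *r : session)
            if (q(*r))
                kept.push_back(r);
        session.swap(kept);
    }

    std::vector<long> ids;
    for (const log_record *r : session)
        ids.push_back(r->id);
    return ids;
}
```

A check for failed logins could, for example, combine one query that selects records of the sshd service with a second query that selects records containing the word "Failed", and classify the result as a warning.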

If this generic structure is built properly with a simple (easy to understand) interface,

many combinations can be made. People who have a logically correct idea

but insufficient programming skills will be able to build checks.

As a consequence we can offer the interface to the user,

who in turn can also make particular checks for the environment that is unique to them.

This - of course - doesn't mean that a clear SQL-interface shouldn't be offered.

Whenever something happens that is out of the ordinary, a line will be written to syslogd.

This will enable users and developers to trace exactly what happened.

The gcm_daemon will also log its startup and shutdown so that abrupt endings of the daemon can be detected.

3.4.2 The initial process

When gcm_daemon starts, some basic actions first take place that go beyond

just opening a connection to the database. The following actions also need to take place:

-

Check whether the database version is still the most recent version.

The daemon will check the version-number of the database.

If the database is not the same version as gcm_daemon, an update will be performed.

When the database is up-to-date, normal processing will continue.

-

If the database reflects that the version of gcm_daemon last used is less recent than

the running version (i.e. a new version has been installed), all records

in the log-table that weren't recognized before will now be set to unprocessed, since

there is a fair chance that they might be recognized this time. This will ensure that no data is lost.
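
The two startup actions can be sketched as follows. This is an in-memory illustration under stated assumptions: versions are modelled as plain integers that share one numbering, the database is a simple structure, and the names database, log_entry and startup are hypothetical. The real update of the database layout is only represented by a placeholder.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, simplified view of a record in the log-table.
struct log_entry
{
    bool processed;
    bool recognized;
};

// Hypothetical stand-in for the database.
struct database
{
    int db_version;       // version of the database layout
    int daemon_version;   // version of gcm_daemon that last used the database
    std::vector<log_entry> log;
};

// Performs the startup actions and returns the number of log records
// that were set back to unprocessed.
int startup(database &db, int running_version)
{
    // First action: bring the database up to date if needed.
    if (db.db_version != running_version) {
        // Placeholder: the real update of the tables would happen here.
        db.db_version = running_version;
    }

    // Second action: a newer daemon gets another chance at the records
    // that the previous version did not recognize.
    int reset = 0;
    if (db.daemon_version < running_version) {
        for (log_entry &e : db.log) {
            if (e.processed && !e.recognized) {
                e.processed = false;
                ++reset;
            }
        }
        db.daemon_version = running_version;
    }
    return reset;
}
```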

3.5 Design ideas

Use of a neural network to analyze system logs:

- Classify words

- Classify message based on word classification